Why OpenCUI

Chatbots, or agents, have long been recognized as powerful tools for delivering personalized service at every stage of the customer journey: before, during, and after sales. Because a chatbot can profoundly influence customer satisfaction, which directly affects a business's bottom line, numerous chatbot development platforms have emerged. Despite this, compared with ubiquitous web and mobile self-service apps, truly effective and usable conversational services remain rare. Why is this the case?

The problem with existing approaches

To be useful for a business, a chatbot needs to be built in three layers: the backend layer, the interaction logic layer, and finally, the language understanding and generation layer. The backend is typically shared with web and mobile apps to ensure a seamless experience, and large language models (LLMs) have become the standard solution for both language understanding and generation. The main difference between chatbot platforms therefore lies in how the conversation flow, or interaction logic, is defined. Platforms are either flow-based or end-to-end LLM-based.

Flow-based: controlled but not flexible

Flowcharts are a standard tool for designing graphical user interfaces (GUIs) and have also been widely adopted for creating conversational user interfaces (CUIs). In a flow-based approach, interactions are defined as turn-by-turn sequences, giving developers precise control over the chatbot's responses, which makes it easier for the chatbot to achieve business objectives.

For GUIs, developers only need to define a limited set of pathways to ensure users can achieve their goals, as users can only navigate within the pathways provided. However, with CUIs, users can—and often will—deviate from these predefined paths. For example, they might change their mind about their departure date or first inquire about the weather before deciding what time to leave.

Unfortunately, when an interaction falls outside of the predefined flows, the chatbot simply doesn’t know what to do. This forces developers into a difficult trade-off: either attempt to cover an exponentially increasing number of conversational paths, leading to skyrocketing costs, or risk delivering a subpar user experience by neglecting critical conversational scenarios.

LLM-based: flexible but lacking control

Large language models (LLMs), particularly those with function-calling capabilities, can be prompted to deliver conversational services and handle a wide range of user inputs. Given a description of what you want your chatbot to do and a list of functions, an LLM generates natural responses and calls the appropriate functions as needed.

However, this broad coverage comes at the cost of control. In traditional software engineering, the runtime executes exactly as specified by the code. LLMs, however, are not always perfect at following natural language instructions. While it’s relatively straightforward to align an LLM-based chatbot with high-level design goals, maintaining consistency in finer details can be challenging—especially when juggling multiple perspectives. Moreover, when something goes wrong, there is often no clear or reliable way to diagnose and resolve the issue effectively.

Towards Full-Stack CUI Components

A chatbot should handle all user queries relevant to your business, regardless of how they are expressed. However, for an externally facing chatbot, the most important thing is to follow its design precisely in order to achieve business objectives, especially for high-impact use cases. Therefore, relying solely on prompt engineering to build interaction logic is not yet a viable solution, and traditional software engineering approaches remain essential for building chatbots with complete control.

While enumerating every possible interaction pathway from start to finish can ensure a reasonable user experience, it introduces significant redundancy and becomes prohibitively expensive as complexity increases. State machines, on the other hand, offer a more concise, factorized conceptual model for user interaction, with carefully defined states, events, and actions that conditionally trigger state transitions or responses. However, determining the correct states and transitions from conversations can be both daunting and error-prone due to the messiness of language and the inherent openness of conversations.
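The redundancy argument above can be made concrete with a rough count. The sketch below is illustrative only and rests on a simplifying assumption that is not from the original text: the only source of variation is the order in which the user chooses to supply the slots, with corrections and digressions ignored. The function names are hypothetical.

```python
import math


def enumerated_flows(num_slots: int) -> int:
    """Start-to-finish paths needed if every slot order gets its own flow."""
    return math.factorial(num_slots)


def factorized_machines(num_slots: int) -> int:
    """Per-slot state machines needed when the interaction is factorized."""
    return num_slots


for n in (2, 5, 8):
    print(f"{n} slots: {enumerated_flows(n)} enumerated flows "
          f"vs {factorized_machines(n)} slot machines")
```

Even under this narrow assumption the enumerated count grows factorially, while the factorized model grows linearly with the number of slots.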

Type-grounded statechart construction

To provide a service to a user conversationally, we just need to collect, via conversation, the information needed to trigger the corresponding API. This means we just need to construct an object of the corresponding function type, including values for all its parameters (or slots). For example, to sell a movie ticket to a user, we need to build the following object:

{

"@class":"BuyMovieTicket",

"movieTitle": "Inception",

"showDate": "2025-01-10",

"showTime": "7:00 PM",

"numberOfTickets": 2,

"seatPreference": "Middle Row"

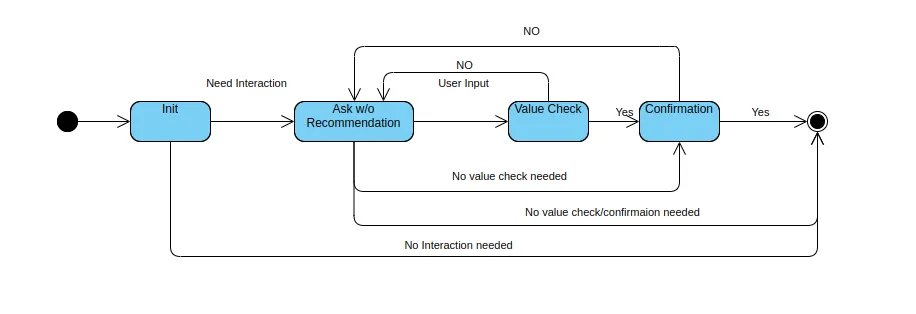

}Instead of manually designing the state space, we advocate for a systematic, type-grounded process. For primitive-typed slots, the interaction required to fill that slot can be modeled by a state machine with the following states (for demonstration purposes only):

- INIT: In this state, actions can be used to initialize the slot using values from the conversation history or the results of a function call. If there is a candidate value, we transition to the CHECK state; otherwise, we transition to the ASK state.

- ASK: In this state, we emit a prompt asking the user to provide a value for the slot. Events converted from user input can then trigger the transition to the CHECK state.

- CHECK: In this state, the proposed value is verified, for example via an API call, to ensure it is serviceable. If the value checks out, we move to the CONFIRMATION state; otherwise, we go back to ASK.

- CONFIRMATION: In this state, the user is given an opportunity to confirm their choice. When such an action is defined and the user confirms, we move to the next state; otherwise, we go back to ASK.

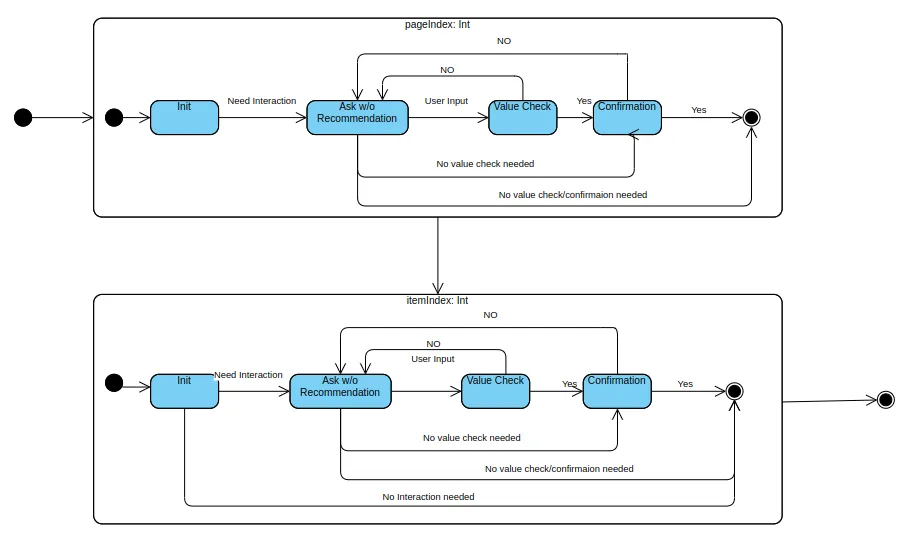
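The four states listed above can be sketched as a small state machine. This is a minimal illustration under stated assumptions, not OpenCUI's actual implementation: the `SlotFiller` class, its hook names (`initialize`, `is_serviceable`, `needs_confirmation`), the extra `DONE` state, and the prompt wording are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum, auto
from typing import Callable, Optional


class State(Enum):
    INIT = auto()
    ASK = auto()
    CHECK = auto()
    CONFIRMATION = auto()
    DONE = auto()  # hypothetical terminal state, added for the sketch


@dataclass
class SlotFiller:
    """Hypothetical state machine that fills one primitive-typed slot."""
    name: str
    initialize: Callable[[], Optional[str]] = lambda: None   # INIT action
    is_serviceable: Callable[[str], bool] = lambda v: True   # CHECK action
    needs_confirmation: bool = True
    state: State = State.INIT
    value: Optional[str] = None

    def step(self, user_input: Optional[str] = None) -> Optional[str]:
        """Advance until user input is needed; return the prompt to emit."""
        while True:
            if self.state is State.INIT:
                self.value = self.initialize()
                self.state = State.CHECK if self.value is not None else State.ASK
            elif self.state is State.ASK:
                if user_input is None:
                    return f"What {self.name} would you like?"
                self.value, user_input = user_input, None
                self.state = State.CHECK
            elif self.state is State.CHECK:
                if self.is_serviceable(self.value):
                    self.state = (State.CONFIRMATION if self.needs_confirmation
                                  else State.DONE)
                else:
                    self.value = None
                    self.state = State.ASK
            elif self.state is State.CONFIRMATION:
                if user_input is None:
                    return f"You chose {self.value} for {self.name}. Is that right?"
                confirmed = user_input.strip().lower() in {"yes", "y"}
                user_input = None
                if confirmed:
                    self.state = State.DONE
                else:
                    self.value = None
                    self.state = State.ASK
            else:  # DONE: nothing left to ask
                return None


# Example run with a made-up list of serviceable show times.
filler = SlotFiller("showTime", is_serviceable=lambda v: v in {"7:00 PM", "9:30 PM"})
print(filler.step())            # asks for the slot
print(filler.step("8:00 PM"))   # unserviceable value, so it asks again
print(filler.step("7:00 PM"))   # serviceable value, so it asks for confirmation
print(filler.step("yes"), filler.state, filler.value)
```

In a real system the raw `user_input` string would be replaced by events produced by dialog understanding; plain strings are used here only to keep the sketch self-contained.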

For slots with a compound data type, like "BuyMovieTicket," we need to use a statechart to model the interaction. A statechart, essentially a compound state machine, extends the basic state machine with the support for parallel and nested states. For example, to fill a slot of type "BuyMovieTicket," the interaction can be modeled with a statechart that consists of multiple state machines—one for each primitive-typed slot, such as "movieTitle" or "numberOfTickets."

If a slot is itself of a compound type, a nested statechart can be introduced to handle its interactions seamlessly. In this way, statecharts let you build complex behavior by composing simple building blocks, and reusing the same design reduces chatbot development, testing, and maintenance costs.

By default, the statechart follows a depth-first, start-to-end schedule. But this logic, and every aspect of the interaction, can be customized through action annotations attached to the corresponding states. For example, the CHECK state can be annotated with a no-op action, or with a conditional transition action that moves the state back to ASK if the value provided by the user is deemed unserviceable. Guards, or conditions, on the actions further increase the flexibility of this state-based modeling.
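The composition and default schedule described above can be sketched as follows. This is a simplified illustration, not OpenCUI's API: the `Slot` and `Frame` classes are hypothetical, each leaf is reduced to ASK and CHECK only, the `check` callable stands in for a CHECK annotation (a no-op by default), and the nested `Showing` type is invented purely to demonstrate nesting.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Union


@dataclass
class Slot:
    """Leaf: one primitive-typed slot, simplified to ASK and CHECK."""
    name: str
    check: Callable[[str], bool] = lambda value: True  # no-op CHECK by default
    value: Optional[str] = None

    def done(self) -> bool:
        return self.value is not None

    def fill(self, text: str) -> None:
        if self.check(text):  # an unserviceable value keeps the slot in ASK
            self.value = text


@dataclass
class Frame:
    """Compound node: a statechart composed of slots and nested frames."""
    type_name: str
    name: str = ""
    children: List[Union[Slot, "Frame"]] = field(default_factory=list)

    def done(self) -> bool:
        return all(child.done() for child in self.children)

    def active(self) -> Optional[Slot]:
        """Depth-first, start-to-end schedule: the first unfilled leaf."""
        for child in self.children:
            if not child.done():
                return child if isinstance(child, Slot) else child.active()
        return None

    def prompt(self) -> Optional[str]:
        leaf = self.active()
        return f"Please provide {leaf.name}." if leaf else None

    def fill(self, text: str) -> None:
        leaf = self.active()
        if leaf is not None:
            leaf.fill(text)

    def to_object(self) -> dict:
        obj = {"@class": self.type_name}
        for child in self.children:
            obj[child.name] = (child.value if isinstance(child, Slot)
                               else child.to_object())
        return obj


ticket = Frame("BuyMovieTicket", children=[
    Slot("movieTitle"),
    Frame("Showing", name="showing", children=[      # hypothetical nested type
        Slot("showDate"),
        Slot("showTime", check=lambda v: v in {"7:00 PM", "9:30 PM"}),
    ]),
    Slot("numberOfTickets", check=str.isdigit),
])

for answer in ["Inception", "2025-01-10", "8:00 PM", "7:00 PM", "2"]:
    print(ticket.prompt(), "->", answer)
    ticket.fill(answer)
print(ticket.to_object())
```

Swapping the `check` callable on a single slot changes that slot's behavior without touching the rest of the frame, which is the kind of local customization the annotations are meant to provide. Values are kept as strings here; type conversion is left out of the sketch.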

Context-Dependent Understanding

Dialog understanding used to be handled with shallow models. These models required large labeled datasets, so building a dialog understanding module could be very labor-intensive and time-consuming. Today, with the advent of large language models (LLMs), it is possible to achieve reasonable accuracy in dialog understanding under few-shot or even zero-shot settings.

While popular LLM-based function calling can be used for dialog understanding and offers a significantly improved developer experience, it has drawbacks in production. When the dialog understanding module makes a mistake, there is often no straightforward way to fix it. Fine-tuning can help, but it cannot be applied to correct a single data point.

Instead of relying solely on an LLM for dialog understanding, we adopt an agentic approach by decomposing dialog understanding into smaller tasks, such as intent detection, slot filling, and yes/no understanding. Each task is framed as an in-context learning problem, with in-context examples dynamically retrieved based on the user's query. If errors occur in understanding, the problematic user input and its correct interpretation are added to the retrieval index. This ensures that similar inputs in the future are matched with more relevant in-context examples, eliminating the need for frequent fine-tuning. Furthermore, these examples are tied to a particular context, ensuring they only affect the dialog within the same context.
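The retrieve-then-prompt loop described above might look like the sketch below. Everything here is an assumption made for illustration: the `ExampleIndex` class, the prompt layout, the context names, and especially the token-overlap similarity, which is a stand-in for whatever retrieval method a real system uses. No LLM is called; the sketch only shows how a correction added to the index changes which examples end up in the prompt.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Example:
    context: str     # where in the dialog the example applies
    utterance: str
    label: str       # the correct interpretation


def similarity(a: str, b: str) -> float:
    """Jaccard overlap of lowercase tokens (stand-in for real retrieval)."""
    ta, tb = set(a.lower().split()), set(b.lower().split())
    return len(ta & tb) / len(ta | tb) if (ta | tb) else 0.0


@dataclass
class ExampleIndex:
    examples: List[Example] = field(default_factory=list)

    def add(self, context: str, utterance: str, label: str) -> None:
        self.examples.append(Example(context, utterance, label))

    def retrieve(self, context: str, utterance: str, k: int = 2) -> List[Example]:
        # Only examples from the same context are eligible.
        scoped = [e for e in self.examples if e.context == context]
        scoped.sort(key=lambda e: similarity(e.utterance, utterance), reverse=True)
        return scoped[:k]


def build_prompt(task: str, context: str, utterance: str,
                 index: ExampleIndex, k: int = 2) -> str:
    """Assemble a few-shot prompt from the retrieved in-context examples."""
    lines = [f"Task: {task}"]
    for e in index.retrieve(context, utterance, k):
        lines.append(f"User: {e.utterance}\nLabel: {e.label}")
    lines.append(f"User: {utterance}\nLabel:")
    return "\n".join(lines)


index = ExampleIndex()
index.add("BuyMovieTicket.confirm", "yes please", "yes")
index.add("BuyMovieTicket.confirm", "no thanks", "no")
index.add("CancelOrder.confirm", "I'm good to go", "yes")

# Suppose "I'm good" was misread as "yes" in the ticket confirmation context.
# The fix is one new example, scoped to that context, with no fine-tuning.
index.add("BuyMovieTicket.confirm", "nah I'm good", "no")
print(build_prompt("yes/no understanding", "BuyMovieTicket.confirm",
                   "I'm good", index))
```

Because retrieval is filtered by context, the correction affects only the ticket confirmation step; the `CancelOrder.confirm` example is never pulled into that prompt.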

Never Build a Chatbot from Scratch

The component-based approach, characterized by open interfaces and encapsulated implementations, was designed to simplify the development of complex systems. While it has become a staple in modern GUI development, its adoption in CUI development—despite the greater complexity of conversational interfaces—has been notably absent, until now.

OpenCUI aims to transform the paradigm of CUI development by introducing CUI components as the core building blocks. Each component is defined by a type and its constituent slots at the schema level, accompanied by an automatically generated statechart backbone tailored to that type. This backbone can be customized using annotations that define both interaction-level behaviors (e.g., conditional actions for emissions and transitions) and language-level behaviors (e.g., examples for in-context learning). Along with the ability to interact with APIs natively at the schema level, these features collectively define how the chatbot systematically collects values for all slots of a given type through conversation.

For conversational designers and chatbot developers, OpenCUI enables a sharper focus on creating value by tackling application-specific challenges or crafting world-class components that can benefit a wide range of applications, instead of reinventing the wheel. For businesses, leveraging OpenCUI’s proven and well-tested components accelerates product delivery, reduces development and maintenance costs, and enhances both quality and user experience. So now there is no reason to build a chatbot from scratch.